Tech Trends and Insights from Silicon Valley: A Glimpse into the Future

Gain firsthand insights from Silicon Valley’s top visionary founders, investors, and industry leaders on the AI, robotics, and venture capital trends shaping the valley.

The cost of computing has gone down exponentially over the past century – driven by generations of new hardware as well as software innovations, each accelerating the descent of the cost curve. As the dollar cost per calculation falls, demand for compute goes up, creating unprecedented tech paradigms and their related applications – from the PC to the web to smartphones and cloud computing and now AI – each changing our lives and giving birth to a fresh set of era-defining tech companies. Expect the current AI wave to follow similar patterns.

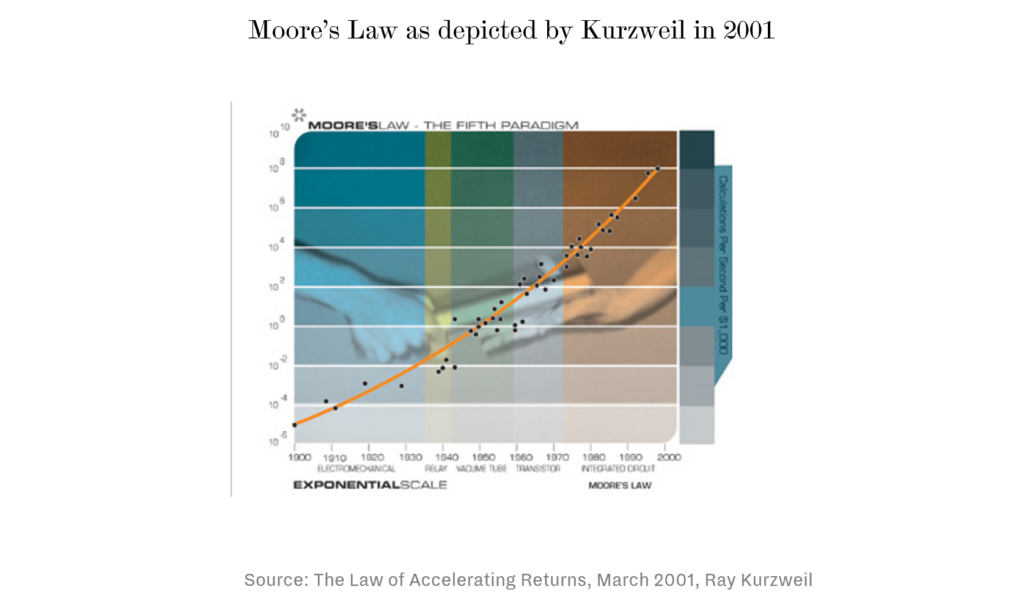

Back in 2002, when the venture capital world was in the depths of its largest nuclear winter and it felt uncertain whether it would ever recover from the bursting of the dot-com bubble, an inventor, entrepreneur and writer named Ray Kurzweil came to present his new article to our venture capital fund. Among the various anxieties venture capitalists faced at the time was the potential end of Moore’s Law. (Gordon Moore, a co-founder of Intel, famously predicted that the number of transistors on an integrated circuit chip would double every two years, giving us cheaper and more powerful computers for years to come.) By the 2000s, transistors were crammed within 100 nanometers of each other, and technologists worried that it would be physically impossible to pack them any closer, as the spacing was approaching its physical lower limit, bound by the size of atoms. Clearly, the thinking went, Moore’s Law would end, and the plateauing of computing performance would put a big dent in innovation.

Kurzweil’s genius was to debunk this fear by abstracting away from the specifics of integrated circuit technology and looking instead at all computing technologies, starting with the electro-mechanical systems of the 1900s, through to vacuum tubes and then integrated circuits (i.e., the chips of today). The vacuum tubes of the early 20th century also had physical limitations, so by focusing on those limitations you could equally have predicted a plateau in technology back then. However, those limitations were superseded by more powerful technology shifts, notably the transistor and the integrated circuit. Kurzweil pointed out that it didn’t really matter which technology humans used – even if the number of transistors on chips stopped doubling every two years – what mattered was that the number of calculations that could be performed per dollar of computing capacity would keep increasing exponentially. Today, even if we assume that Moore’s Law is dead (despite state-of-the-art chip technology going from Intel Pentium’s 90 nanometer process in 2001 to Apple’s latest 3 nanometer processes, something which was deemed impossible at the time), other technologies such as ARM’s RISC architecture and Nvidia’s GPUs have introduced innovations that continue to extend Kurzweil’s curve irrespective of Moore’s Law. Even if transistor spacing doesn’t get any closer than 3 nanometers, Nvidia’s Huang’s Law and other innovations are making sure that the number of calculations we can perform per dollar of chip capacity will keep growing exponentially.

Moore’s Law, seen as a supply curve, highlights the boost in demand for compute

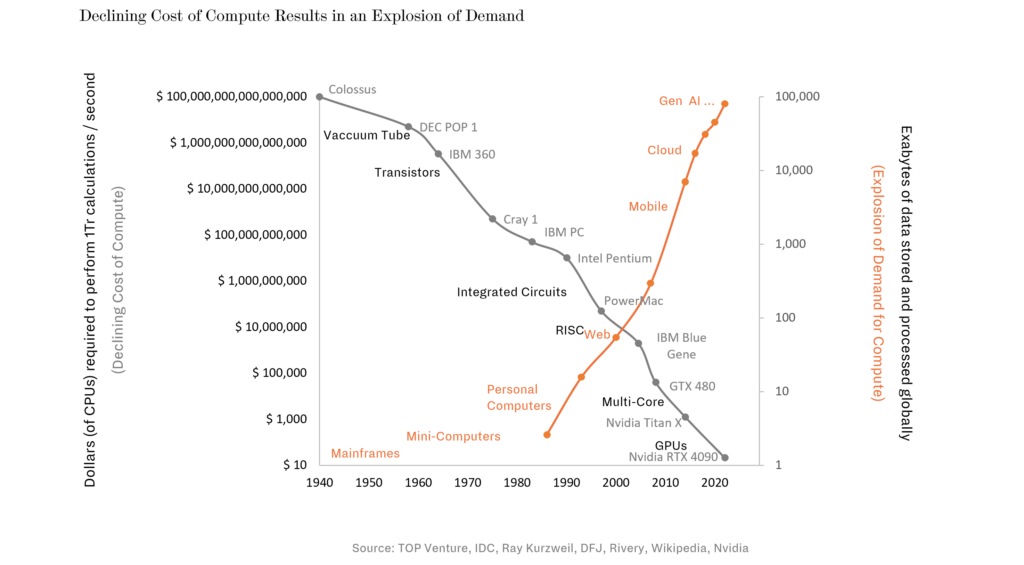

Yet, as ingenious a technologist as he may be, Kurzweil was not an economist, and an economist might point out that Kurzweil’s curve should be looked at differently: as a cost curve, which goes down and to the right instead of up and to the right. Rather than thinking about the number of calculations per second that can be done for $x of computing power, it might be better to picture the same line the other way around and look at the dollar cost of performing, say, 1 trillion calculations per second, as plotted in the accompanying chart. Looked at from this angle, we can see that in the 1940s, on the Colossus (the computer famously used by British codebreakers at Bletchley Park during WWII to break Nazi encryption codes), it would have cost 100 million-billion dollars to perform 1 trillion calculations per second. By the 1990s, with Intel’s first-generation Pentium chip, the cost had fallen to $1B, and today, using Nvidia’s GPUs, it takes merely $20 to perform the same number of calculations per second.
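To make the slope of this cost curve concrete, the three data points quoted above can be turned into an implied halving time. The sketch below is a back-of-the-envelope calculation, not a figure from the original analysis. The specific years (1944 for Colossus, 1993 for the first Pentium, 2024 for "today") are assumptions standing in for the decades named in the text, and the function name `halving_time_years` is purely illustrative.

```python
import math

# Cost in dollars of performing 1 trillion calculations per second,
# as quoted in the text. The years are ASSUMPTIONS standing in for
# "the 1940s", "the 1990s" and "today".
COST_POINTS = [
    (1944, 1e17),  # Colossus: "100 million-billion dollars"
    (1993, 1e9),   # first-generation Intel Pentium: "$1B"
    (2024, 20.0),  # Nvidia GPUs: "$20"
]


def halving_time_years(start, end):
    """Average number of years for the cost to halve between two points."""
    (year_a, cost_a), (year_b, cost_b) = start, end
    halvings = math.log2(cost_a / cost_b)  # how many times the cost halved
    return (year_b - year_a) / halvings


# Halving time for each consecutive pair of data points.
for start, end in zip(COST_POINTS, COST_POINTS[1:]):
    years = halving_time_years(start, end)
    print(f"{start[0]}-{end[0]}: cost halves every {years:.2f} years")

# Halving time across the whole period.
overall = halving_time_years(COST_POINTS[0], COST_POINTS[-1])
print(f"Overall: cost halves every {overall:.2f} years")
```

Under these assumed dates, the cost halves roughly every one to two years, and the more recent interval shows a faster decline than the earlier one. Shifting the assumed years by a few either way changes the decimals but not the overall picture.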

Meanwhile, the costs of other technologies, such as data storage and communications, have seen similar reductions.

As any economics student would tell you, as costs come down, demand goes up. And so it has been: the amount of ‘compute’ the world consumes has also increased exponentially over the past decades. Waves of new applications have been created to take advantage of those lower costs, be they the latest servers running AI models on Nvidia chips or the supercomputers (aka smartphones) which we all have in our pockets! These use cases are all increasing the amount of computing done in the world. As seen in the chart above, this exponential explosion in demand is reflected in the total amount of data that is processed globally – what IDC calls the datasphere – which has gone from single-digit exabytes in the last millennium, to hundreds of exabytes in the 2000s, and to 80,000 exabytes today. That is 80 trillion gigabytes, equivalent to the storage capacity of 600 billion modern iPhones!
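The unit conversion behind the datasphere figure can be checked with a few lines of arithmetic. This is a quick sanity check that assumes decimal (SI) units, where 1 exabyte equals 1 billion gigabytes. The variable names are illustrative, and the per-phone capacity is simply what the two quoted figures imply, not a number stated in the text.

```python
# ASSUMPTION: decimal (SI) units, i.e. 1 EB = 10^18 bytes and 1 GB = 10^9 bytes.
GB_PER_EXABYTE = 10**9

datasphere_exabytes = 80_000   # "80,000 exabytes today"
iphones = 600 * 10**9          # "600 billion modern iPhones"

# Convert the datasphere to gigabytes, then spread it across the phones.
datasphere_gb = datasphere_exabytes * GB_PER_EXABYTE
implied_gb_per_iphone = datasphere_gb / iphones

print(f"Datasphere: {datasphere_gb:,} GB")
print(f"Implied capacity per iPhone: {implied_gb_per_iphone:.0f} GB")
```

The conversion gives 80 trillion gigabytes, and dividing by 600 billion phones implies roughly 133 GB per phone, which is close to a common 128 GB storage tier.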

Underlying this explosion of data usage are tectonic shifts in the application platforms that are consuming that data, from the mainframes of the 1950s, to the personal computers of the 1980s, the web, the cloud, mobile computing, and now AI. None of these shifts would have been possible without the dramatic reduction in the cost of computing. The personal computer would have been unaffordable using mainframe chip technology. Cloud computing would not be viable using Intel Pentium technology. The iPhone would have been unusable with Apple’s PowerPC chip from the 1990s, and AI models would have been far too time-consuming to train without Nvidia’s GPUs.

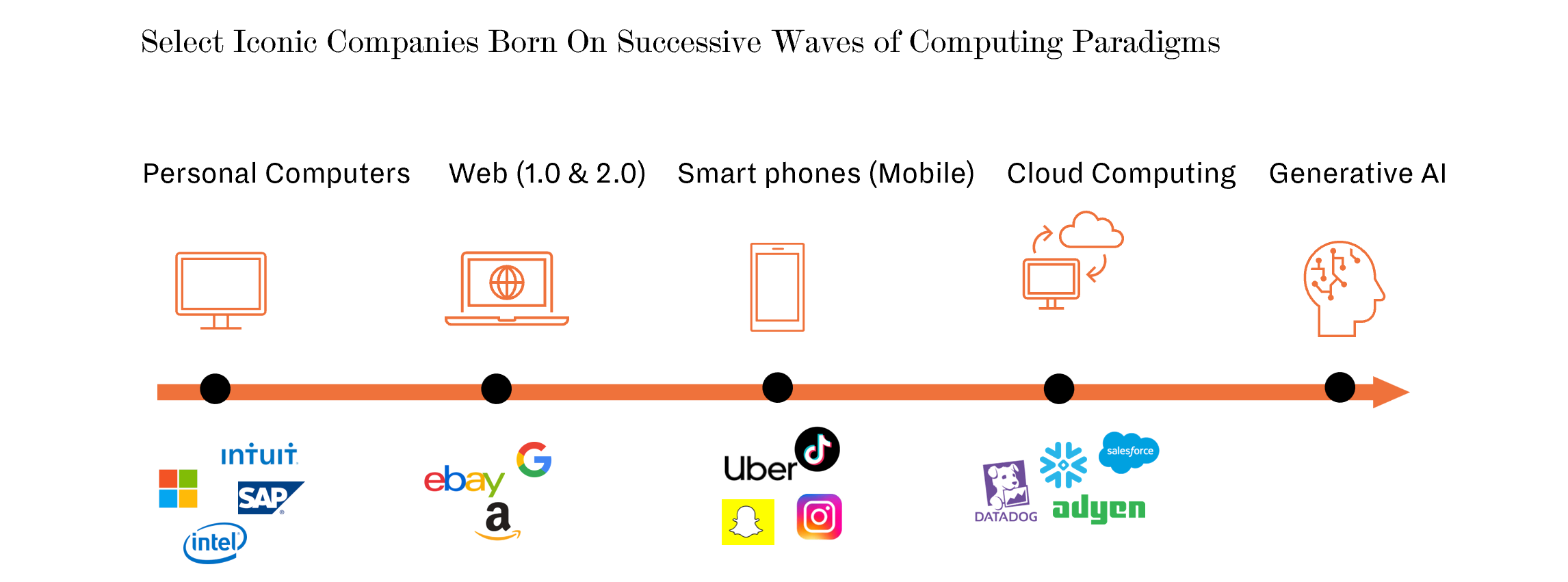

What is also clear is that each of these tectonic shifts in technology platforms has enabled new waves of tech companies to emerge and thrive by developing new usage paradigms based on the new cost base. The mainframe wave gave birth to IBM’s monopoly in the tech sector; the PC wave begat the dominant Microsoft-Intel partnership as well as the then-upstart Apple, and many other standalone companies like Intuit and AMD; Google and Amazon jumped on the new wave created by the web; Instagram, Uber and Snap could only exist with the advent of the mobile computing wave; and the cloud computing wave allowed thousands of companies to harness its power and create new SaaS products at zero distribution cost. This spurred the move to always-on connectivity and created the need for the likes of Datadog and Snowflake to become its data lifeguards.

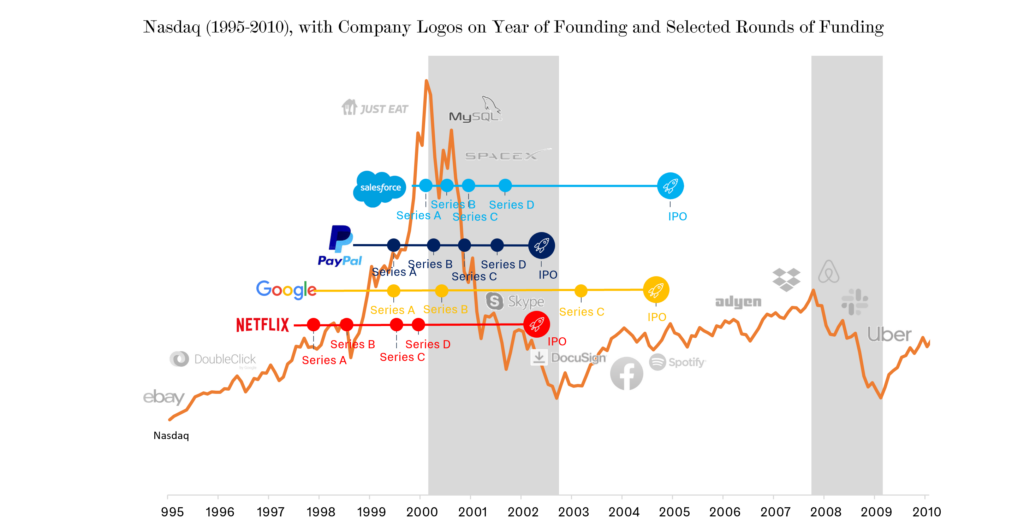

When Kurzweil presented his exponential graphs in 2001, he predicted an age of singularity where computers would become more intelligent than humans, and ultimately become indistinguishable from them. At the time, this prediction seemed outlandish. The dot-com bubble had burst and Moore’s Law was about to end (!) – how could there be room for futuristic predictions and techno-optimism? Yet, a few months after the publication of Kurzweil’s paper, even while public stock markets continued to plummet, venture capitalists funded an up-and-coming company called Salesforce at a $300M valuation. And a little later, just as the NASDAQ was hovering at its lows, a company called Google, which Yahoo had refused to buy a year earlier for a mere $1M, also raised a round of funding to help it expand a newly blossoming revenue stream called search ads. PayPal, now a $60B juggernaut, IPO’d at an $800M valuation, raising just $70M.

Today, as the technology and venture capital worlds grapple with the hangover from the lead-up to 2021, the world continues to debate the death of Moore’s Law, much as it did in 2001; and while Kurzweil’s singularity may seem closer now given the advent of AI technology, we are still examining it, perhaps with a greater sense of urgency. Some argue that AI will result in rogue super-intelligent robots. Others argue that AI will bring in a new industrial revolution and allow us all to be smarter. Whatever form this takes, there is little doubt that Kurzweil’s scaling laws will continue as they have for the past 100+ years, with the cost of compute decreasing exponentially, and that five years from now, computers and AI will be orders of magnitude more powerful than they are today, creating a new tectonic shift in our societies. McKinsey predicts that generative AI could add $2.6 trillion to $4.4 trillion annually to the world economy – like adding a new United Kingdom (GDP of $3.1 trillion) to the world – and Goldman Sachs believes that 25% of US and European work tasks could be automated by AI. One doesn’t have to believe in the singularity to understand that the changes brought on by AI will be immense, and that these shifts will enable new generations of tech giants to be born, to thrive, and to bring new innovations that will, in one way or another, change our lives.

As you read this today, entrepreneurs are busy founding the companies that aim to bring us that future and that will become tomorrow’s iconic tech giants, and venture capitalists are no doubt hustling to find and fund the companies they decide to bet on as future incumbents of this new wave. Much as we are amazed that Salesforce, PayPal, and Google could have been such fledglings only two decades ago, in 20 years we will no doubt look back with amazement at the early days of the current shift being brought upon us by the new wave of AI-driven technologies, and at the stellar returns made by those who invested in them.

If you have any questions about the themes discussed in this article or about TOP Funds (our Technology Opportunity Partners), please do not hesitate to contact us: info@bedrockgroup.ch

Authors: Richard Rimer and Salman Farmanfarmaian, TOP Funds